With the abundance of Light Detection and Ranging (LIDAR) sensor data and the expanding self-driving car industry, accurate object detection is central to improving the performance and safety of autonomous vehicles. However, point cloud data is highly sparse, and this sparsity varies from one LIDAR to another, so there is a need to study how well object detection algorithms adapt to data from different sensors. Because high-end LIDAR is expensive, this work explores whether a network trained on a standard dataset such as KITTI can be used for inference on point cloud data from a 2D LIDAR that is modified to work as a 3D LIDAR. We also explore whether the average precision varies when the input fed to the network changes. We use the Complex YOLO architecture, a state-of-the-art real-time 3D object detection network, and train it on the KITTI dataset.

The contributions of this work are twofold:

- Generate a single bird's-eye view and a 2D point map of the point cloud data for the KITTI dataset, feed each as input to the Complex YOLO network to perform object detection, and evaluate which of the two inputs performs better

- Evaluate the possibility of using a low-cost 2D LIDAR as an alternative to a 3D LIDAR
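The bird's-eye-view input mentioned in the first contribution can be sketched as a top-down rasterization of the point cloud. This is a minimal, dependency-free illustration rather than the preprocessing actually used in this work: the function name `birds_eye_view`, the grid extents, the cell size, and the three per-cell features (maximum height, maximum intensity, log-normalized density) are all assumptions chosen for the example.

```python
import math


def birds_eye_view(points, x_range=(0.0, 40.0), y_range=(-20.0, 20.0), cell=0.5):
    """Rasterize (x, y, z, intensity) points into a top-down grid.

    Returns three row-major grids: max height, max intensity, and a
    log-normalized point density per cell. Ranges and cell size are
    assumed values, not taken from the original work.
    """
    rows = int((x_range[1] - x_range[0]) / cell)
    cols = int((y_range[1] - y_range[0]) / cell)
    height = [[0.0] * cols for _ in range(rows)]
    intensity = [[0.0] * cols for _ in range(rows)]
    count = [[0] * cols for _ in range(rows)]

    for x, y, z, r in points:
        # Discard points outside the region covered by the grid.
        if not (x_range[0] <= x < x_range[1] and y_range[0] <= y < y_range[1]):
            continue
        i = min(rows - 1, int((x - x_range[0]) / cell))
        j = min(cols - 1, int((y - y_range[0]) / cell))
        # Keep the highest point and the strongest return seen in each cell.
        if count[i][j] == 0 or z > height[i][j]:
            height[i][j] = z
        intensity[i][j] = max(intensity[i][j], r)
        count[i][j] += 1

    # Compress the point count into [0, 1]; the log(64) scale is an assumption.
    density = [[min(1.0, math.log(n + 1) / math.log(64)) for n in row]
               for row in count]
    return height, intensity, density
```

Stacking the three grids as image channels would give a single 2D image that a 2D detection backbone can consume; how the channels are scaled before training is left open here.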

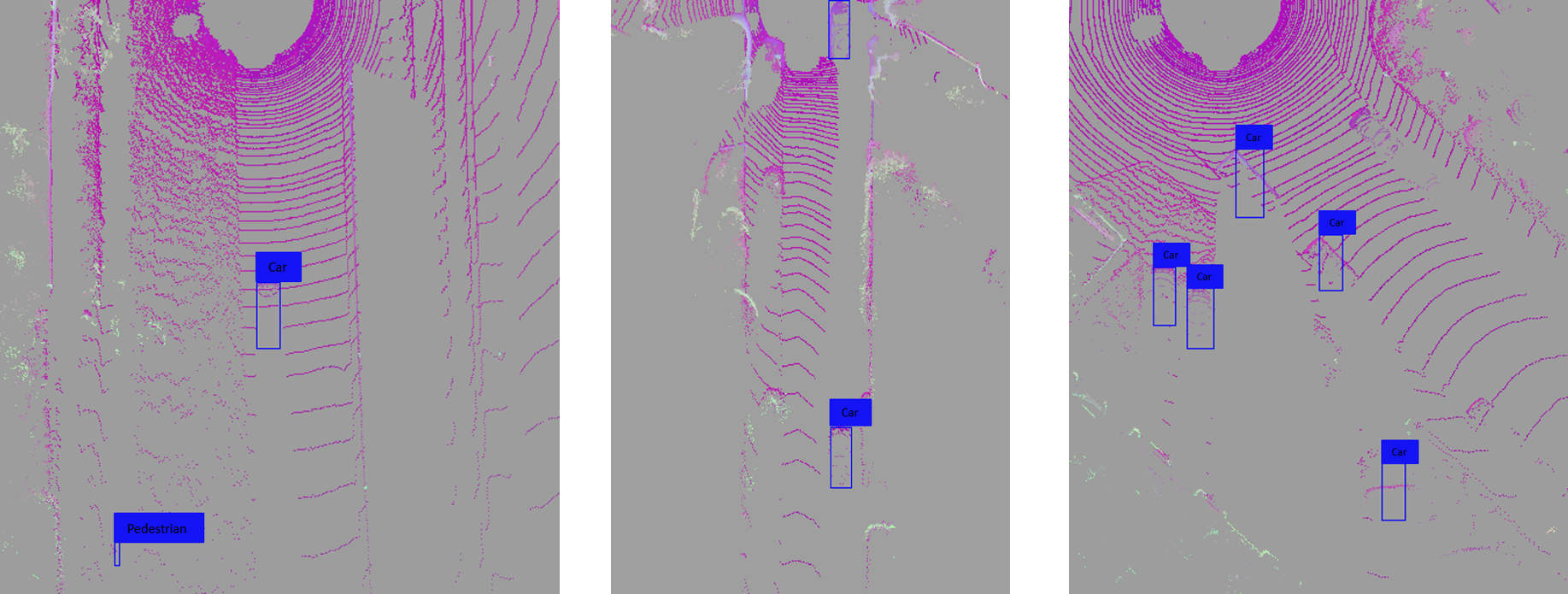

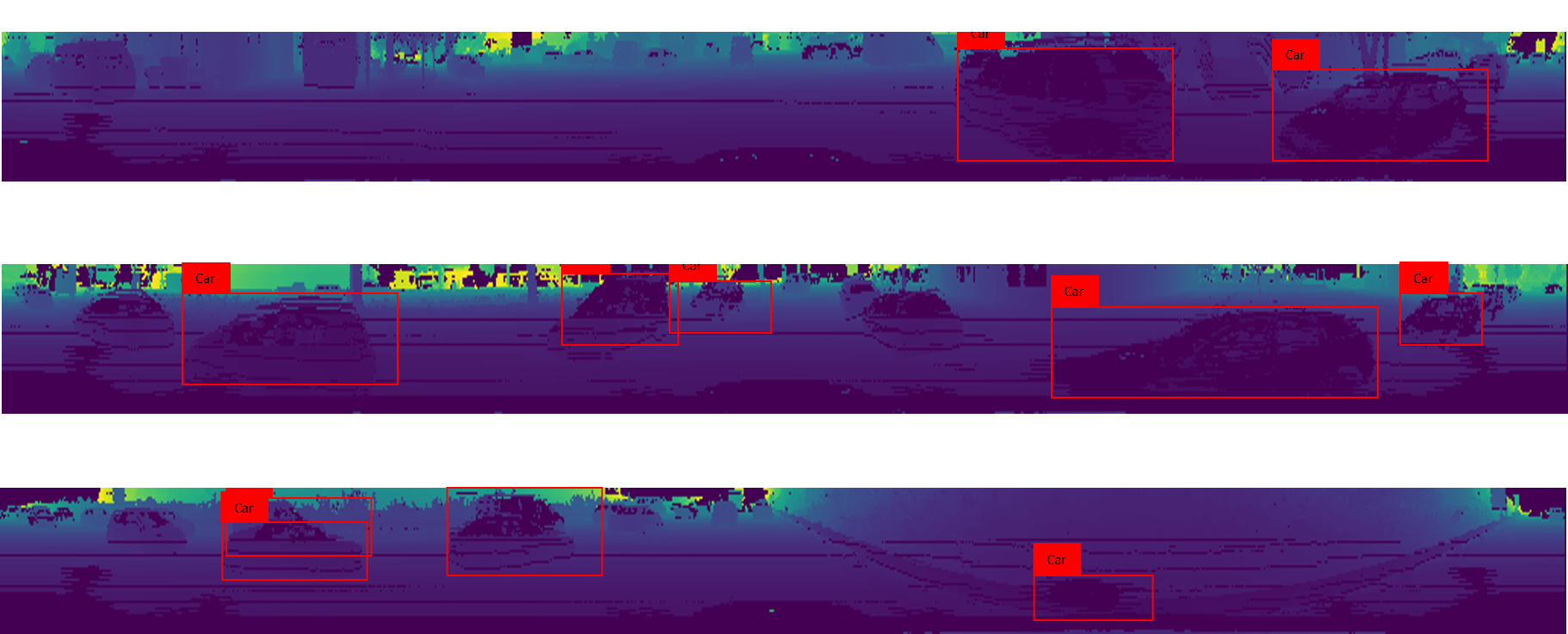

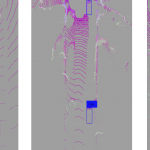

Results of performing object detection on the KITTI dataset when the 3D point cloud data is converted to a 2D bird's-eye view and fed as input to the Complex YOLO architecture. Some bounding boxes are not oriented properly over the cars.

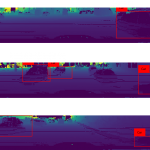

Results of performing object detection on the KITTI dataset when the 3D point cloud data is converted to a 2D panorama view and fed as input to the Complex YOLO architecture. Numerous cars and pedestrians are left undetected by the network.

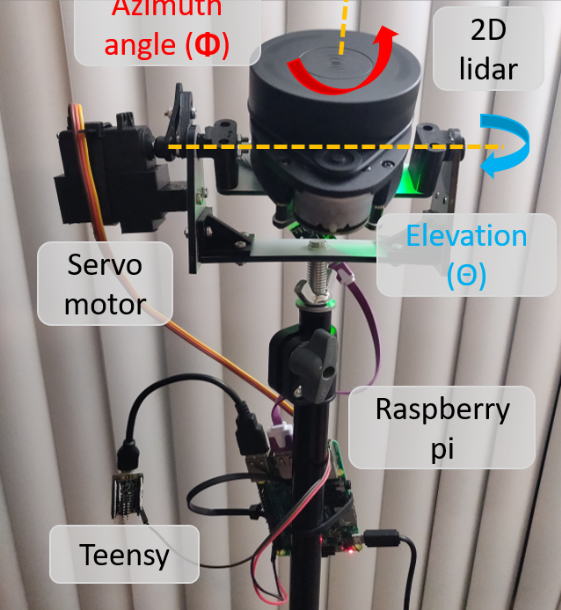
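The 2D panorama input used for the results above can be illustrated as a spherical projection, in which each point lands in a pixel indexed by its azimuth and elevation and the pixel stores the range. This is only a sketch under stated assumptions: the function name `panorama_view`, the image size, and the vertical field of view are hypothetical values, and the projection actually used in this work may differ.

```python
import math


def panorama_view(points, width=360, height=32, elev_range=(-25.0, 3.0)):
    """Project (x, y, z) points onto an azimuth-by-elevation range image.

    Each pixel holds the range of the nearest point that falls into it,
    or 0.0 if no point does. Image size and elevation limits (degrees)
    are assumed values.
    """
    lo, hi = elev_range
    image = [[0.0] * width for _ in range(height)]

    for x, y, z in points:
        rng = math.sqrt(x * x + y * y + z * z)
        if rng == 0.0:
            continue
        azimuth = math.degrees(math.atan2(y, x))  # -180 .. 180
        ratio = max(-1.0, min(1.0, z / rng))      # guard against rounding
        elevation = math.degrees(math.asin(ratio))
        if not (lo <= elevation <= hi):
            continue
        col = int((azimuth + 180.0) / 360.0 * width) % width
        # Row 0 is the top of the image (highest elevation).
        row = min(height - 1, int((hi - elevation) / (hi - lo) * height))
        if image[row][col] == 0.0 or rng < image[row][col]:
            image[row][col] = rng
    return image
```

Because many pixels stay empty when the cloud is sparse, an image built this way can leave distant or small objects covered by only a few pixels.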

2D LIDAR SETUP

To obtain 3D point cloud data of an environment, a low-cost 2D RPLIDAR A1 was used. This 2D lidar performs a 360-degree scan of the environment in a single plane. To obtain 3D point cloud data, the lidar was mounted on an external add-on that sweeps it across the environment. The add-on consists of 3D-printed attachments that let the 2D lidar rotate about an axis, which provides the elevation angle. The 2D lidar itself gives two readings: the azimuth angle and the depth of the reflecting surface at that azimuth angle at any instant. The third parameter, the elevation angle, comes from the modified setup, which is driven by a servo motor to obtain precise angles.

The servo motor is connected to a Teensy microcontroller, which is in turn connected to a Raspberry Pi 3 through a USB port. The Teensy is used instead of the Raspberry Pi to control the servo because the Raspberry Pi has no analog GPIO pins, so the servo would have to be driven from the available digital pins with a PWM-based scheme. That approach is less accurate and prone to jitter in the servo, which compromises the overall efficiency and accuracy of the system. To overcome this issue, a Teensy microcontroller, which has good analog pins for driving the servo, was used.

The servo was driven in 1-degree steps over a range of -30 to 70 degrees to capture only the region of interest (similar to a Velodyne lidar). Finally, the collected data is sent to the Raspberry Pi through the USB port and then forwarded to the host PC over an ad hoc network, which lets the user control the servo wirelessly. Note, however, that this modified 2D lidar does not support real-time 3D point cloud collection and works only for static scenes.
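The three readings described above (depth, azimuth angle, elevation angle) can be turned into XYZ points with a coordinate conversion. The sketch below assumes the readings can be treated as standard spherical coordinates with no mounting offsets; the true geometry depends on how the tilt axis is mounted, which the text does not specify. The function names, the `sweeps` data layout, and the convention that a zero distance marks an invalid return are all hypothetical.

```python
import math


def to_cartesian(distance_m, azimuth_deg, elevation_deg):
    """Convert one (range, azimuth, elevation) reading to (x, y, z).

    Assumes plain spherical coordinates with the sensor at the origin.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = distance_m * math.cos(el)  # projection onto the ground plane
    return (horizontal * math.cos(az),
            horizontal * math.sin(az),
            distance_m * math.sin(el))


def elevation_steps(start_deg=-30, stop_deg=70, step_deg=1):
    """Servo angles for one sweep, matching the 1-degree steps in the text."""
    return list(range(start_deg, stop_deg + 1, step_deg))


def assemble_cloud(sweeps):
    """Build a point list from {elevation_deg: [(azimuth_deg, distance_m), ...]}.

    A distance of 0 is assumed to mark an invalid return and is skipped.
    """
    cloud = []
    for elevation in sorted(sweeps):
        for azimuth, distance in sweeps[elevation]:
            if distance > 0:
                cloud.append(to_cartesian(distance, azimuth, elevation))
    return cloud
```

With the -30 to 70 degree range at 1-degree steps, `elevation_steps()` yields 101 servo positions, each contributing one full 360-degree scan. That is consistent with the setup being limited to static scenes.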

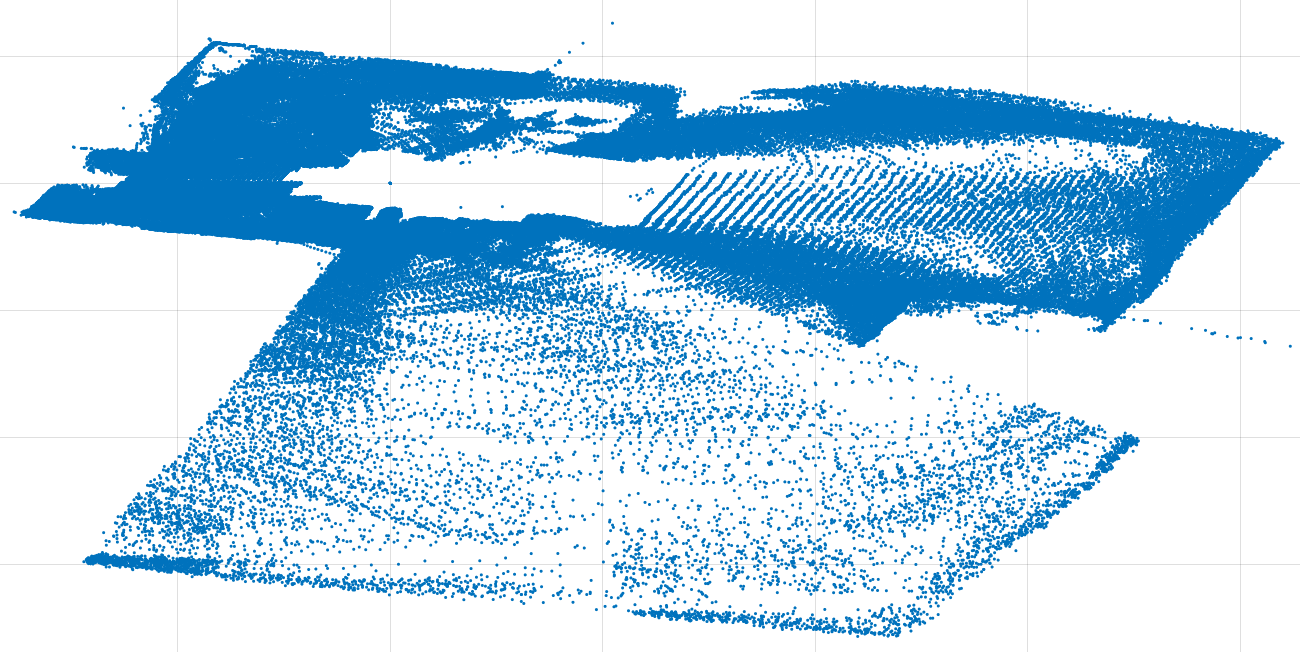

SAMPLE DATA COLLECTED

The XYZ point cloud data is plotted as a scatter plot in MATLAB. To give a better visual sense of depth, an RGB map is also added to the point cloud, as shown in the figure.
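One way to attach an RGB map to the points is to color each point by its distance from the sensor. The sketch below assumes that this is what the RGB map refers to; the plots in this work were produced in MATLAB, and the function name `depth_colors` and the red-to-blue hue ramp are illustrative choices only.

```python
import colorsys
import math


def depth_colors(points):
    """Return one (r, g, b) tuple per (x, y, z) point, colored by depth.

    The nearest point maps to red and the farthest to blue; this ramp is
    an assumed choice, not the colormap used for the original figure.
    """
    if not points:
        return []
    depths = [math.sqrt(x * x + y * y + z * z) for x, y, z in points]
    near, far = min(depths), max(depths)
    span = (far - near) or 1.0  # avoid dividing by zero for a flat cloud
    colors = []
    for d in depths:
        t = (d - near) / span  # 0.0 at the nearest point, 1.0 at the farthest
        colors.append(colorsys.hsv_to_rgb(t * 2.0 / 3.0, 1.0, 1.0))
    return colors
```

The resulting list can be passed as the per-point color argument of a 3D scatter plot in whichever plotting tool is used.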
